Regularizing Reinforcement Learning with State Abstraction

Abstract

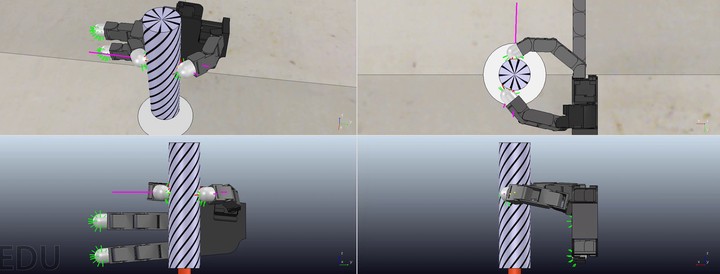

State abstraction in a discrete reinforcement learning setting clusters states that share a similar optimal action, yielding an easier-to-solve decision process. In this paper, we generalize the concept of state abstraction to continuous-action reinforcement learning by defining an abstract state as a state cluster over which a near-optimal policy of simple shape exists. We propose a hierarchical reinforcement learning algorithm that simultaneously finds the state space clustering and the optimal sub-policy in each cluster. The main advantage of the proposed framework is that it provides a straightforward way of regularizing reinforcement learning by controlling the behavioral complexity of the learned policy. We apply our algorithm to several benchmark tasks and a robot tactile manipulation task and show that we can match state-of-the-art deep reinforcement learning performance by combining a small number of linear policies.
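As a purely illustrative sketch of what "combining a small number of linear policies" can look like, and not the algorithm proposed in this paper, the snippet below assigns a state to its nearest cluster center and applies that cluster's linear sub-policy. The hard nearest-center assignment, the names `LinearSubPolicy`, `nearest_cluster`, and `abstract_policy`, and all numerical values are assumptions made for this example; here the clustering and the sub-policies are fixed by hand rather than learned.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class LinearSubPolicy:
    """Hypothetical linear sub-policy: action = gain * state + bias."""
    gain: List[List[float]]  # one row per action dimension
    bias: List[float]        # one entry per action dimension

    def act(self, state: Sequence[float]) -> List[float]:
        # Matrix-vector product plus bias, written out in plain Python.
        return [
            sum(g * s for g, s in zip(row, state)) + b
            for row, b in zip(self.gain, self.bias)
        ]


def nearest_cluster(state: Sequence[float],
                    centers: Sequence[Sequence[float]]) -> int:
    """Hard assignment (an assumption): index of the closest cluster center."""
    dists = [
        sum((s - c) ** 2 for s, c in zip(state, center))
        for center in centers
    ]
    return dists.index(min(dists))


def abstract_policy(state: Sequence[float],
                    centers: Sequence[Sequence[float]],
                    sub_policies: Sequence[LinearSubPolicy]) -> List[float]:
    """Select the sub-policy of the state's cluster and apply it."""
    k = nearest_cluster(state, centers)
    return sub_policies[k].act(state)


# Two hand-picked clusters in a 2-D state space with a 1-D action.
centers = [[-1.0, 0.0], [1.0, 0.0]]
sub_policies = [
    LinearSubPolicy(gain=[[0.5, 0.0]], bias=[0.1]),
    LinearSubPolicy(gain=[[-0.5, 0.0]], bias=[-0.1]),
]

left_action = abstract_policy([-0.8, 0.2], centers, sub_policies)
right_action = abstract_policy([0.6, -0.1], centers, sub_policies)
```

In this sketch the number of clusters is the knob that bounds how complex the overall behavior can be: with a single cluster the policy is globally linear, and each additional cluster adds one more linear piece.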